User’s Guide

Spatialize an audio source

To spatialize an Audio component:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

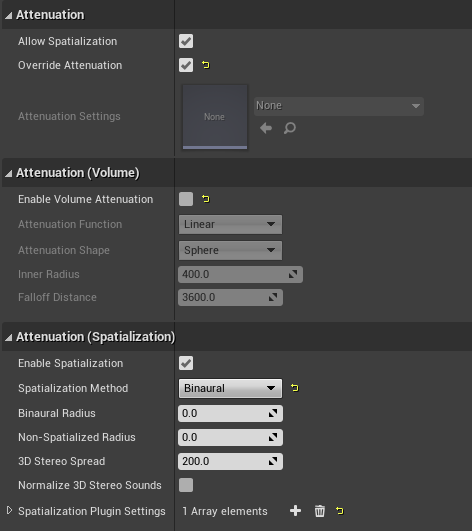

Under Attenuation, check Override Attenuation.

Under Attenuation (Spatialization), set Spatialization Method to Binaural.

Steam Audio will apply HRTF-based binaural rendering to the Audio Source, using default settings. You can control many properties of the spatialization using the Steam Audio Source component and Steam Audio Spatialization Settings asset.
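As a rough illustration of what HRTF-based binaural rendering involves, the sketch below convolves a mono signal with a pair of head-related impulse responses (HRIRs), one per ear. This is not Steam Audio's implementation; the `convolve` and `render_binaural` helpers and the tiny HRIR values are hypothetical and chosen only to keep the example readable.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a per-ear HRIR to get (left, right)."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Made-up HRIRs for a source on the listener's right: the right ear
# receives the sound earlier and louder than the left ear.
hrir_left = [0.0, 0.0, 0.5]
hrir_right = [1.0, 0.0, 0.0]
left, right = render_binaural([1.0, 0.5], hrir_left, hrir_right)
```

Real HRIRs are hundreds of samples long and vary with source direction, which is why a production renderer would use a faster convolution method than this direct loop.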

Apply distance falloff

You can continue to use Unreal’s built-in tools to control how the volume of an audio source falls off with distance. To do so:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

Under Attenuation (Volume), check Enable Volume Attenuation.

Use the Attenuation Function, Attenuation Shape, Inner Radius, and Falloff Distance to control the distance falloff. Refer to the Unreal documentation for more information.

Steam Audio will automatically use this curve to apply distance attenuation.
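To see how Inner Radius and Falloff Distance interact, here is a minimal sketch of a linear falloff curve, assuming a spherical Attenuation Shape and a linear Attenuation Function. The `linear_falloff` function is a hypothetical helper, not part of Unreal or Steam Audio, and Unreal's other attenuation functions follow different curves.

```python
def linear_falloff(distance, inner_radius, falloff_distance):
    """Volume multiplier in [0, 1] for a linear attenuation curve."""
    if distance <= inner_radius:
        return 1.0  # full volume inside the inner radius
    if falloff_distance <= 0.0:
        return 0.0  # no falloff range: silent outside the inner radius
    t = (distance - inner_radius) / falloff_distance
    return max(0.0, 1.0 - t)
```

With an inner radius of 400 and a falloff distance of 3600, a source 2200 units away would play at half volume under this model.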

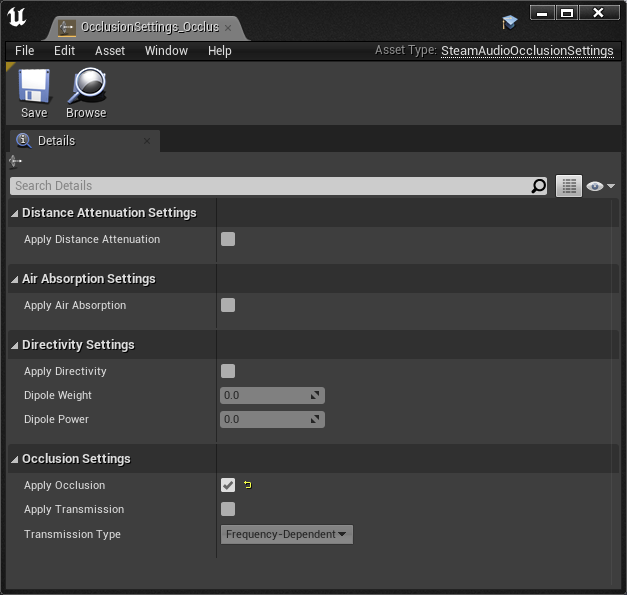

Apply frequency-dependent air absorption

You can control how different sound frequencies fall off with distance. For example, when playing a distant explosion sound, higher frequencies can fall off more than lower frequencies, leading to a more muffled sound. To do this:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

In the Details tab, under Attenuation (Occlusion), check Occlusion.

Click the + icon next to Occlusion Plugin Settings, then use the drop-down that appears to select an existing Occlusion Plugin Settings asset, or create a new one. Skip this step if an Occlusion Plugin Settings asset has already been set for this Audio component.

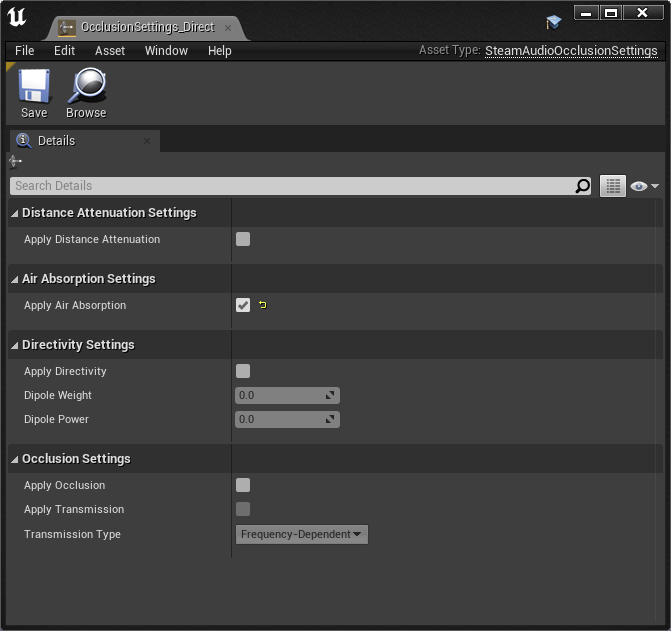

Open the Occlusion Plugin Settings asset associated with the Audio component.

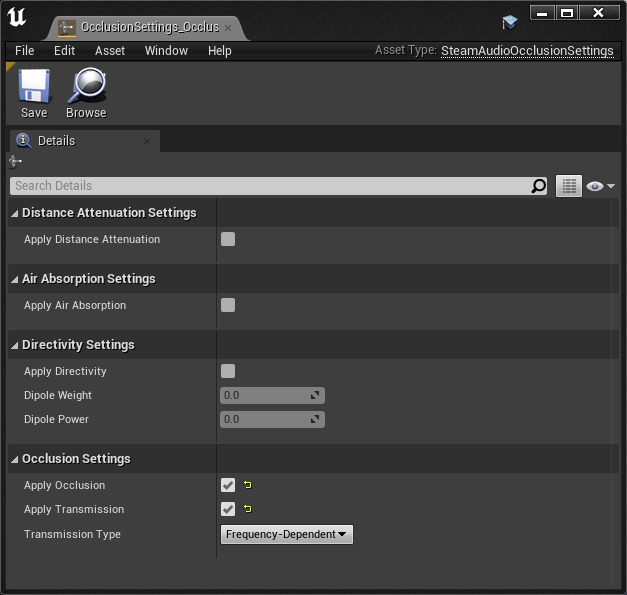

Under Air Absorption Settings, check Apply Air Absorption.

Steam Audio will now use its default air absorption model to apply frequency-dependent distance falloff to the Audio component.
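One common way to model frequency-dependent air absorption is an exponential decay per frequency band, where higher bands decay faster. The sketch below assumes three bands (low, mid, high) and illustrative coefficients per meter; neither the band count nor the values are taken from this guide, and `air_absorption` is a hypothetical helper.

```python
import math

# Illustrative absorption coefficients per meter for the low, mid, and
# high bands. These are assumptions made for this example only.
COEFFS = (0.0002, 0.0017, 0.0182)


def air_absorption(distance_m, coeffs=COEFFS):
    """Return a per-band gain in (0, 1] for a source distance_m away."""
    return tuple(math.exp(-k * distance_m) for k in coeffs)


low, mid, high = air_absorption(100.0)
```

At 100 meters the high band is attenuated far more than the low band, which produces the muffled quality described above.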

Specify a source directivity pattern

You can configure a source that emits sound with different intensities in different directions. For example, a megaphone projects sound mostly to the front. To do this:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

In the Details tab, under Attenuation (Occlusion), check Occlusion.

Click the + icon next to Occlusion Plugin Settings, then use the drop-down that appears to select an existing Occlusion Plugin Settings asset, or create a new one. Skip this step if an Occlusion Plugin Settings asset has already been set for this Audio component.

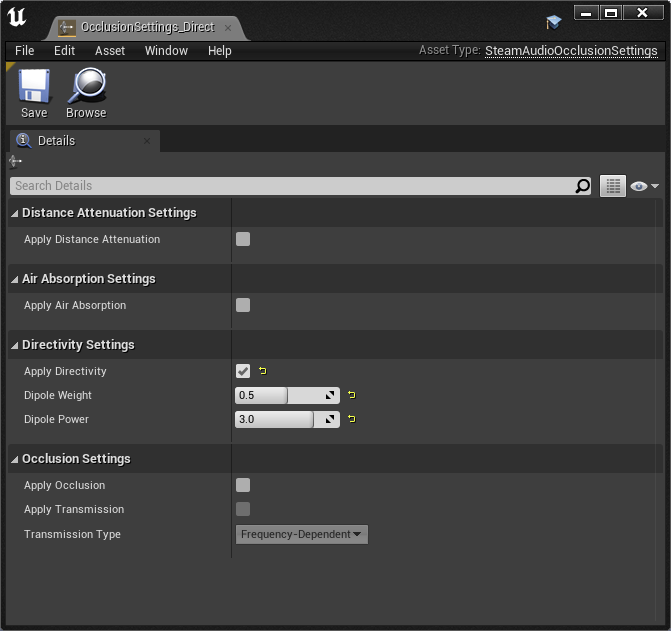

Open the Occlusion Plugin Settings asset associated with the Audio component.

Under Directivity Settings, check Apply Directivity.

Use the Dipole Weight and Dipole Power sliders to control the directivity pattern.

Note

If a Steam Audio Source component is attached to the actor, then selecting the actor will cause a visualization of the directivity pattern to be displayed in the viewport.

For more information, see Steam Audio Occlusion Settings.
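To build intuition for the two sliders, the sketch below evaluates a weighted dipole pattern. It assumes that Dipole Weight blends between an omnidirectional pattern (0) and a dipole (1), and that Dipole Power sharpens the result. That interpretation and the `directivity_gain` helper are assumptions for illustration, so check Steam Audio Occlusion Settings for the exact definition.

```python
import math


def directivity_gain(angle_rad, dipole_weight, dipole_power):
    """Gain for a direction angle_rad away from the source's forward axis."""
    blend = (1.0 - dipole_weight) + dipole_weight * math.cos(angle_rad)
    return abs(blend) ** dipole_power


front = directivity_gain(0.0, 0.5, 1.0)      # directly in front
behind = directivity_gain(math.pi, 0.5, 1.0)  # directly behind
```

Under this model a weight of 0.5 gives a cardioid-like pattern: full level in front and silence directly behind, similar to the megaphone example.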

Use a custom HRTF

You (or your players) can replace Steam Audio’s built-in HRTF with any HRTF of your choosing. This is useful for comparing different HRTF databases, measurement or simulation techniques, or even allowing players to use a preferred HRTF with your game or app.

Steam Audio loads custom HRTFs from SOFA files. These files have a .sofa extension.

Note

The Spatially-Oriented Format for Acoustics (SOFA) file format is defined by an Audio Engineering Society (AES) standard, AES69. For more details, refer to that standard.

To tell Steam Audio to load a SOFA file at startup:

In the Content Browser, click Add/Import > Import To …, then navigate to the SOFA file you want to import and click Open.

In the main menu, click Edit > Project Settings, then select Steam Audio from the list on the left.

Under Custom HRTF Settings, click the dropdown next to SOFA File, then select the SOFA file you imported.

HRTFs loaded from SOFA files affect direct and indirect sound generated by audio sources, as well as reverb.

Warning

The SOFA file format allows for very flexible ways of defining HRTFs, but Steam Audio only supports a restricted subset. The following restrictions apply (for more information, including definitions of the terms below, refer to the SOFA specification):

SOFA files must use the SimpleFreeFieldHRIR convention.

The Data.SamplingRate variable may be specified only once, and may contain only a single value. Steam Audio will automatically resample the HRTF data to the user’s output sampling rate at run-time.

The SourcePosition variable must be specified once for each measurement.

Each source must have a single emitter, with EmitterPosition set to [0 0 0].

The ListenerPosition variable may be specified only once (and not once per measurement). Its value must be [0 0 0].

The ListenerView variable is optional. If specified, its value must be [1 0 0] (in Cartesian coordinates) or [0 0 1] (in spherical coordinates).

The ListenerUp variable is optional. If specified, its value must be [0 0 1] (in Cartesian coordinates) or [0 90 1] (in spherical coordinates).

The listener must have two receivers. The receiver positions are ignored.

The Data.Delay variable may be specified only once. Its value must be 0.
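If you want to screen SOFA files before importing them, most of the restrictions above can be expressed as simple checks. The sketch below is not part of Steam Audio and does not read SOFA files. It assumes you have already parsed the file with a library of your choice into a plain dictionary keyed by SOFA names, with one row per entry. The `check_sofa_metadata` helper is hypothetical, and it skips the SourcePosition rule and does not verify which coordinate system ListenerView and ListenerUp use.

```python
def check_sofa_metadata(meta):
    """Return a list of restriction violations found in parsed SOFA metadata."""
    problems = []

    def require(condition, message):
        if not condition:
            problems.append(message)

    require(meta.get("SOFAConventions") == "SimpleFreeFieldHRIR",
            "convention must be SimpleFreeFieldHRIR")
    require(len(meta.get("Data.SamplingRate", [])) == 1,
            "Data.SamplingRate must hold a single value")
    require(meta.get("EmitterPosition") == [[0, 0, 0]],
            "EmitterPosition must be a single [0 0 0] entry")
    require(meta.get("ListenerPosition") == [[0, 0, 0]],
            "ListenerPosition must be a single [0 0 0] entry")
    # Optional variables: a missing value is treated as acceptable.
    require(meta.get("ListenerView", [[1, 0, 0]]) in ([[1, 0, 0]], [[0, 0, 1]]),
            "ListenerView must be [1 0 0] or [0 0 1]")
    require(meta.get("ListenerUp", [[0, 0, 1]]) in ([[0, 0, 1]], [[0, 90, 1]]),
            "ListenerUp must be [0 0 1] or [0 90 1]")
    require(len(meta.get("ReceiverPosition", [])) == 2,
            "the listener must have two receivers")
    delay = meta.get("Data.Delay", [])
    require(len(delay) == 1 and all(v == 0 for v in delay[0]),
            "Data.Delay must be specified once, with value 0")
    return problems
```

An empty list means none of the checked restrictions were violated; it does not guarantee that Steam Audio will accept the file.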

Tag acoustic geometry

You can use Steam Audio to model how your level geometry causes occlusion, reflection, reverb, and other effects on your audio sources. You start by tagging the actors that you want to use for acoustic calculations:

Select the actor you want to tag. It must be either an actor containing a Static Mesh component, or a Landscape actor.

In the Details tab, click Add Component > Steam Audio Geometry.

Not all objects have a noticeable influence on acoustics. For example, in a large hangar, the room itself obviously influences the acoustics. A small tin can on the floor, though, most likely doesn’t. But large numbers of small objects can collectively influence the acoustics. For example, while a single wooden crate might not influence the hangar reverb, large stacks of crates are likely to have some impact.

Note

You don’t need to create an additional mesh just to tag an object as Steam Audio Geometry. You can directly use the same meshes used for visual rendering.

Once you’ve tagged all the geometry in the level, export it:

In the toolbar, click Steam Audio > Export Static Geometry.

By default, all geometry is treated as static. In other words, you can’t move, deform, or otherwise change the geometry at run-time. If you need to do this, set it up as a dynamic object, as discussed in the next section.

You can simplify geometry before it’s exported, export multiple meshes with a single Steam Audio Geometry component, and more. For more information, see Steam Audio Geometry.

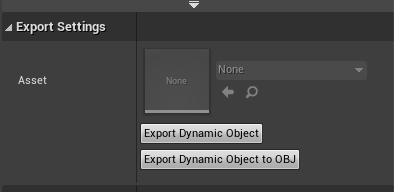

Set up dynamic (movable) geometry

You can mark specific actors as dynamic geometry, which tells Steam Audio to update occlusion, reverb, and other acoustic effects as the actor moves. To do this:

Select the actor you want to tag.

In the Details tab, click Add Component > Steam Audio Dynamic Object.

Attach Steam Audio Geometry components to the actor as needed.

On the Steam Audio Dynamic Object component, click Export Dynamic Object.

At run-time, any changes made to the transform of the actor to which the Steam Audio Dynamic Object component has been attached will automatically be passed to Steam Audio.

You can attach a Steam Audio Dynamic Object component in a Blueprint as well. After exporting it, the Blueprint can be freely instantiated in any level and moved around; Steam Audio will automatically update acoustic effects accordingly. For example, this can be used to create a door Blueprint that automatically occludes sound when added to any level, or large walls that a player can build, which automatically reflect sound.

Warning

Changes made to the transforms of children of the actor containing the Steam Audio Dynamic Object will not be passed to Steam Audio. The entire object and all its children must move/animate as a rigid body.

For more information, see Steam Audio Dynamic Object.

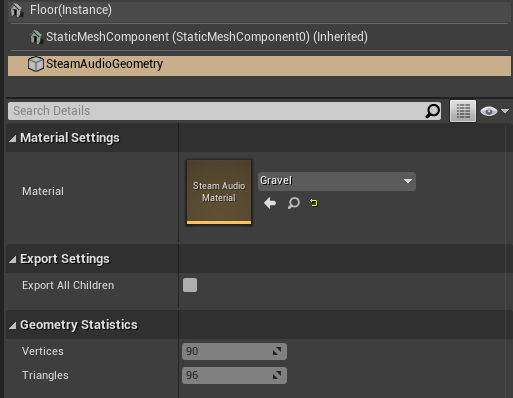

Associate an acoustic material with geometry

You can specify acoustic material properties for any actor that has a Steam Audio Geometry component. These properties control how the actor reflects, absorbs, and transmits sound, for different frequencies. To specify an acoustic material:

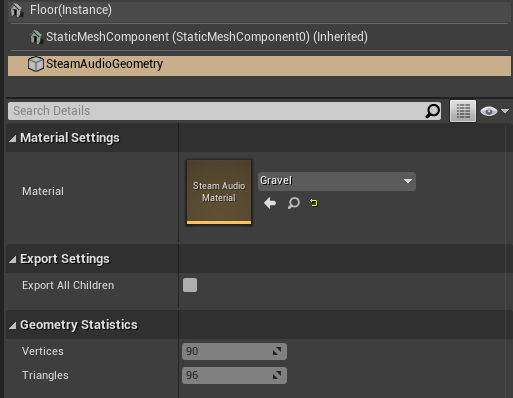

Select the actor containing the Steam Audio Geometry component, then click the Steam Audio Geometry component in the Details tab.

Set Material to a new or existing Steam Audio Material asset.

Steam Audio contains a small library of built-in materials (Plugins/SteamAudio/Content/), but you can create your own and reuse them across your project. To create a new material:

In the Content Browser, navigate to the directory where you want to create your material.

Click Add/Import > Sounds > Steam Audio > Steam Audio Material.

Give your new material a name, and configure its acoustic properties.

For more information on individual material properties, see Steam Audio Material.

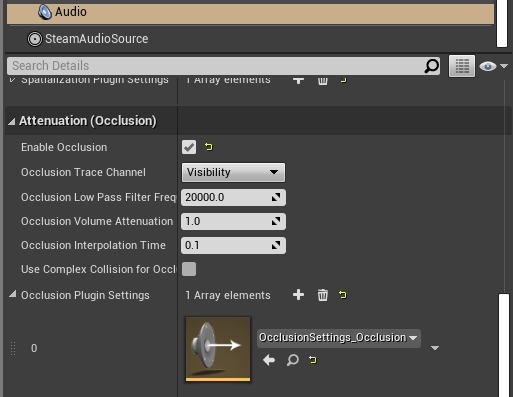

Model occlusion by geometry

You can configure an audio source to be occluded by scene geometry. To do this:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

In the Details tab, under Attenuation (Occlusion), check Enable Occlusion.

Click the + icon next to Occlusion Plugin Settings, then use the drop-down that appears to select an existing Occlusion Plugin Settings asset, or create a new one. Skip this step if an Occlusion Plugin Settings asset has already been set for this Audio component.

Open the Occlusion Plugin Settings asset associated with the Audio component.

Under Occlusion Settings, check Apply Occlusion.

Click Add Component > Steam Audio Source. Skip this step if a Steam Audio Source component is already attached to the actor.

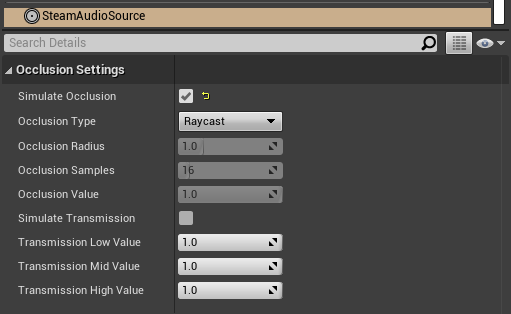

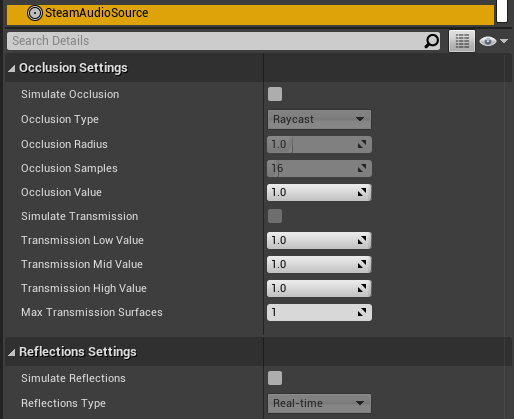

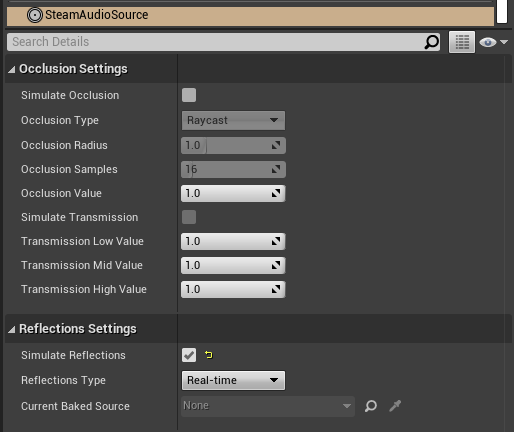

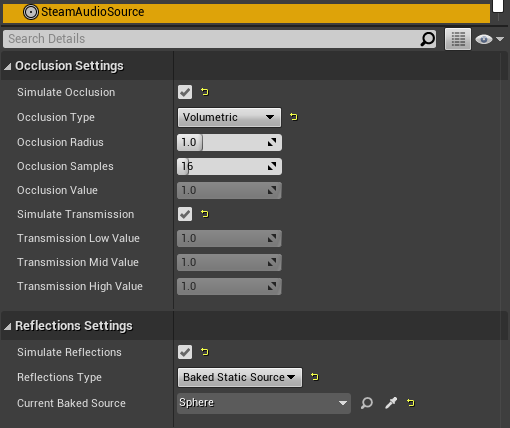

Under Occlusion Settings, check Simulate Occlusion.

Steam Audio will now use raycast occlusion to check if the source is occluded from the listener by any geometry. This assumes that the source is a single point. You can also model sources with larger spatial extent, or explicitly control occlusion manually or via scripting. For more information, see Steam Audio Source.
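Conceptually, raycast occlusion for a point source asks whether the straight segment from the source to the listener hits any geometry. The sketch below tests a segment against a list of triangles using the standard Möller-Trumbore intersection test. It is a simplified illustration under those assumptions, not Steam Audio's ray tracer, and all function names are hypothetical.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def segment_hits_triangle(p0, p1, tri, eps=1e-9):
    """Moller-Trumbore test restricted to the segment from p0 to p1."""
    a, b, c = tri
    d = sub(p1, p0)              # unnormalized, so t in (0, 1) spans the segment
    e1, e2 = sub(b, a), sub(c, a)
    h = cross(d, e2)
    det = dot(e1, h)
    if abs(det) < eps:
        return False             # segment is parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(p0, a)
    u = inv * dot(s, h)
    if u < 0.0 or u > 1.0:
        return False
    q = cross(s, e1)
    v = inv * dot(d, q)
    if v < 0.0 or u + v > 1.0:
        return False
    t = inv * dot(e2, q)
    return eps < t < 1.0 - eps


def is_occluded(source, listener, triangles):
    """True if any triangle blocks the direct path from source to listener."""
    return any(segment_hits_triangle(source, listener, t) for t in triangles)
```

For example, a wall triangle lying in the plane x = 0 would occlude a source at (-1, 0, 0) from a listener at (1, 0, 0), but not from a listener on the same side of the wall.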

Model transmission through geometry

You can configure an audio source to be transmitted through occluding geometry, with the sound attenuated based on material properties. To do this:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

In the Details tab, under Attenuation (Occlusion), check Occlusion.

Click the + icon next to Occlusion Plugin Settings, then use the drop-down that appears to select an existing Occlusion Plugin Settings asset, or create a new one. Skip this step if an Occlusion Plugin Settings asset has already been set for this Audio component.

Open the Occlusion Plugin Settings asset associated with the Audio component.

Under Occlusion Settings, check Apply Transmission.

Click Add Component > Steam Audio Source. Skip this step if a Steam Audio Source component is already attached to the actor.

Under Occlusion Settings, check Simulate Occlusion and Simulate Transmission.

Steam Audio will now model how sound travels through occluding geometry, based on the acoustic material properties of the geometry. You can also control whether the transmission effect is frequency-dependent, or explicitly control transmission manually or via scripting. For more information, see Steam Audio Source.
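A simple way to think about frequency-dependent transmission is as a per-band multiplier taken from the material of each surface the sound passes through. The sketch below assumes three frequency bands and that the coefficients of successive surfaces multiply together; both are assumptions made for illustration, and `transmitted_levels` is a hypothetical helper rather than a Steam Audio function.

```python
def transmitted_levels(band_levels, surfaces):
    """Attenuate per-band levels by each surface's transmission coefficients.

    band_levels: levels for the (low, mid, high) bands before transmission.
    surfaces: one (low, mid, high) coefficient tuple per occluding surface,
              each coefficient in [0, 1].
    """
    out = list(band_levels)
    for coeffs in surfaces:
        out = [level * c for level, c in zip(out, coeffs)]
    return out


# A hypothetical wall that passes low frequencies better than high ones.
wall = (0.5, 0.25, 0.125)
levels = transmitted_levels([1.0, 1.0, 1.0], [wall])
```

Because high frequencies are attenuated most in this example, a sound heard through the wall would seem duller than the same sound heard directly.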

Model reflection by geometry

You can configure an audio source to be reflected by surrounding geometry, with the reflected sound attenuated based on material properties. Reflections often enhance the sense of presence when used with spatial audio. To do this:

Select the actor containing the Audio component, then select the Audio component in the Details tab.

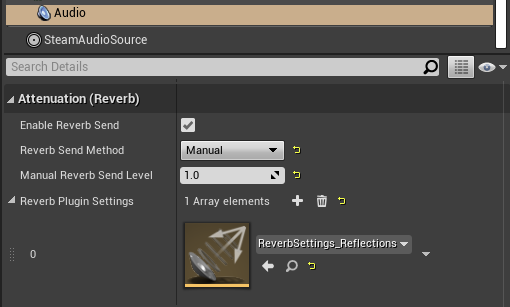

In the Details tab, under Attenuation (Reverb), check Enable Reverb Send.

Click the + icon next to Reverb Plugin Settings, then use the drop-down that appears to select an existing Reverb Plugin Settings asset, or create a new one. Skip this step if a Reverb Plugin Settings asset has already been set for this Audio component.

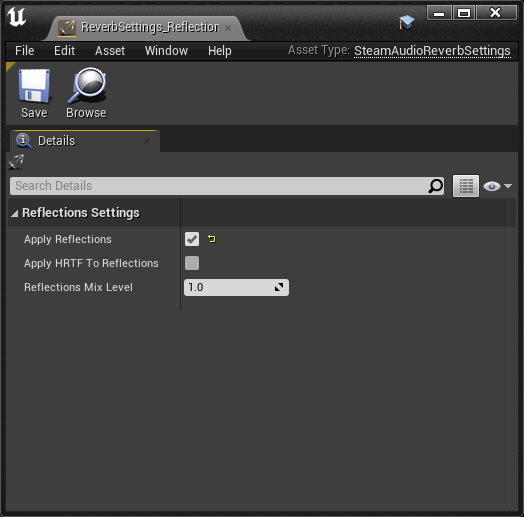

Open the Reverb Plugin Settings asset associated with the Audio component.

Under Reflections Settings, check Apply Reflections.

Select the actor containing the Audio component.

Click Add Component > Steam Audio Source. Skip this step if a Steam Audio Source component is already attached to the actor.

Under Reflections Settings, check Simulate Reflections.

Steam Audio will now use real-time ray tracing to model how sound is reflected by geometry, based on the acoustic material properties of the geometry. You can control many aspects of this process, including how many rays are traced, how many successive reflections are modeled, how reflected sound is rendered, and much more. Since modeling reflections is CPU-intensive, you can pre-compute reflections for a static sound source, or even offload the work to the GPU. For more information, see Steam Audio Source and Steam Audio Settings.

Simulate physics-based reverb at the listener position

You can also use ray tracing to automatically calculate physics-based reverb at the listener’s position. Physics-based reverbs are directional, which means they can model the direction from which a distant echo can be heard, and keep it consistent as the player looks around. Physics-based reverbs also model smooth transitions between different spaces in your level, which is crucial for maintaining immersion as the player moves. To set up physics-based reverb:

In the main menu, click Edit > Project Settings.

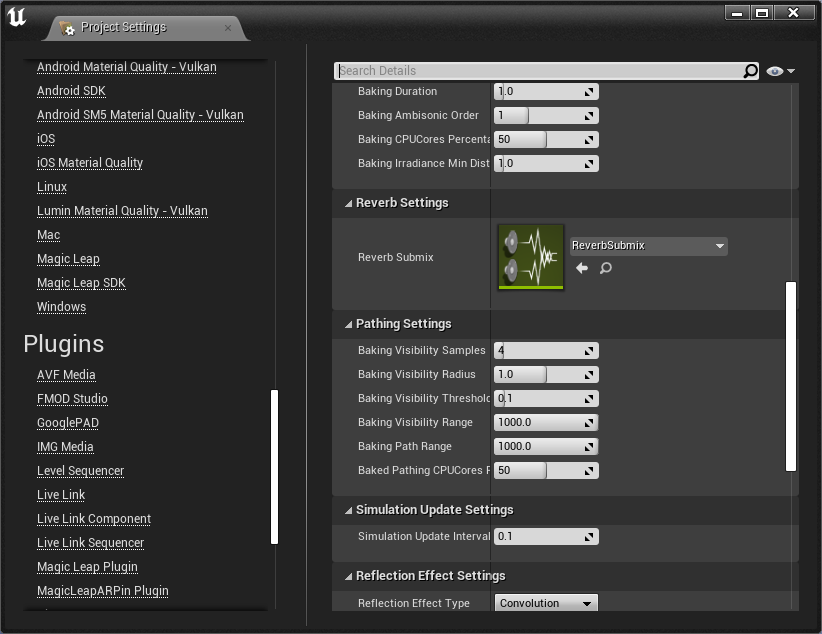

Click Plugins > Steam Audio to open the Steam Audio Settings.

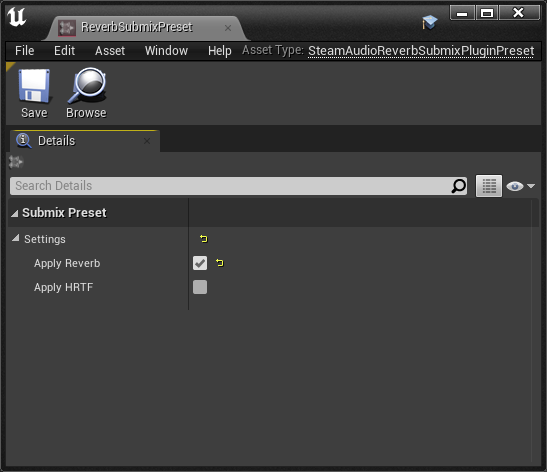

Under Reverb Settings, set Reverb Submix to a new or existing Sound Submix asset, then double-click the asset to open it.

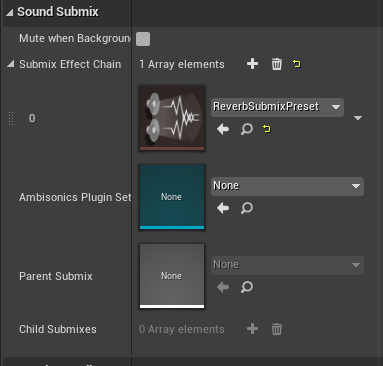

Under Sound Submix, click the + icon next to Submix Effect Chain to add a new effect to the submix.

Use the drop-down that appears to select a new or existing Submix Effect Preset asset, then double-click the asset to open it.

Under Submix Preset, check Apply Reverb.

Select the actor containing the Audio component to which you want to apply the listener-centric reverb, then select the Audio component in the Details tab.

In the Details tab, under Attenuation (Reverb), check Enable Reverb Send.

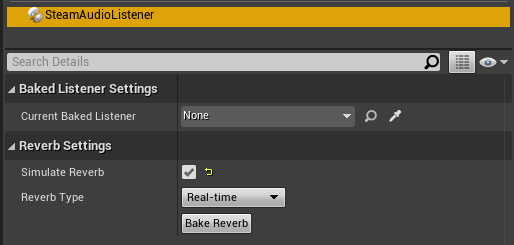

Select any actor in your level (a good choice might be the Player Start actor).

In the Details tab, click Add Component > Steam Audio Listener.

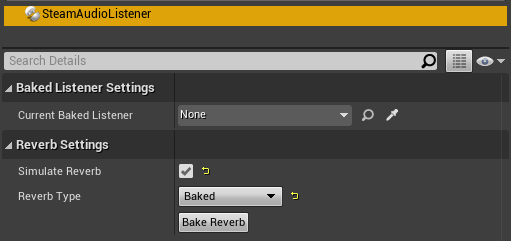

On the Steam Audio Listener component, under Reverb Settings, check Simulate Reverb.

Steam Audio will now use real-time ray tracing to simulate physics-based reverb. You can control many aspects of this simulation, including how many rays are traced, the length of the reverb tail, whether the reverb is rendered as a convolution reverb, and much more. Since modeling physics-based reverb is CPU-intensive, you can (and typically will) pre-compute reverb throughout your level. You can even offload simulation as well as rendering work to the GPU. For more information, see Steam Audio Reverb, Steam Audio Listener, and Steam Audio Settings.

Create sound probes for baked sound propagation

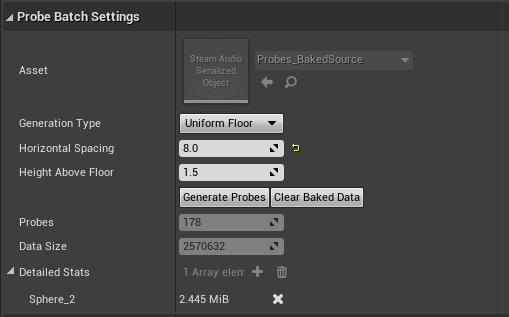

Modeling reflections and reverb can be very CPU-intensive. For levels with mostly static geometry, you can pre-compute (or bake) these effects in the editor. Before doing so, you must create one or more sound probes, which are the points at which Steam Audio will simulate reflections or reverb when baking. At run-time, the source and listener positions relative to the probes are used to quickly estimate the reflections or reverb. To set up sound probes:

In the Place Actors tab, click Volumes, then drag a Steam Audio Probe Volume actor into your level. Adjust the size and shape of the Steam Audio Probe Volume like any other volume actor.

In the Details tab, under Probe Batch Settings, click Generate Probes.

Steam Audio will generate several probes within the volume contained by the probe batch. You can configure how many probes are created, and how they are placed. For more information, see Steam Audio Probe Volume.
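To get a feel for how probe count grows with volume size and spacing, the sketch below places probes on a regular grid inside an axis-aligned box. This is only one possible placement scheme and is not necessarily the one Steam Audio uses; `generate_grid_probes` and its parameters are hypothetical.

```python
def generate_grid_probes(box_min, box_max, spacing):
    """Return probe positions on a regular grid inside an axis-aligned box."""
    def axis(lo, hi):
        # Number of grid points that fit between lo and hi, inclusive of lo.
        count = int((hi - lo) // spacing) + 1
        return [lo + i * spacing for i in range(count)]

    return [(x, y, z)
            for x in axis(box_min[0], box_max[0])
            for y in axis(box_min[1], box_max[1])
            for z in axis(box_min[2], box_max[2])]


probes = generate_grid_probes((0.0, 0.0, 0.0), (2.0, 2.0, 0.0), 1.0)
```

Halving the spacing in a three-dimensional volume increases the probe count roughly eightfold, which affects both bake time and baked data size.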

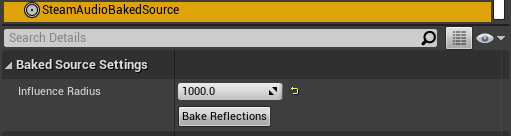

Bake reflections from a static source

If an audio source doesn’t move (or only moves within a small distance), you can bake reflections for it. To do this:

Select the actor that is at the static source’s position.

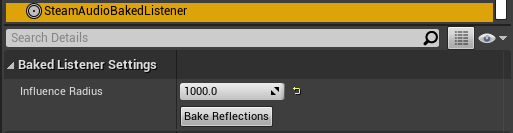

In the Details tab, click Add Component > Steam Audio Baked Source.

Click Bake Reflections. Baking may take a while to complete.

Select the actor containing the Audio component to which you want to apply reflections.

Make sure a Steam Audio Source component is attached to the actor, and Simulate Reflections is checked.

Set Reflections Type to Baked Static Source.

Set Current Baked Source to the actor containing the Steam Audio Baked Source component you added earlier.

You can control many aspects of the baking process. For more information, see Steam Audio Source, Steam Audio Baked Source, and Steam Audio Settings.

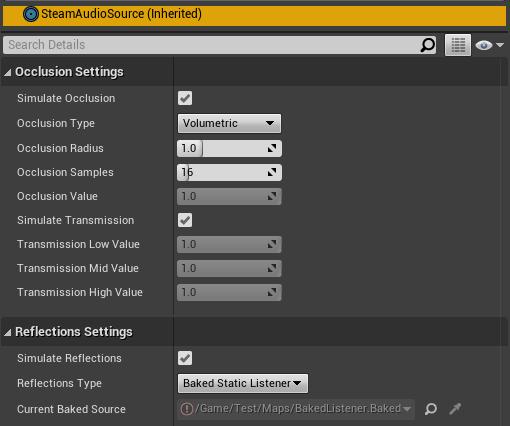

Bake reflections to a static listener

In some applications, the listener may only be able to teleport between a few pre-determined positions. In this case, you can bake reflections for any moving audio source. To do this:

Select (or create, if needed) an actor at one of the listener positions.

In the Details tab, click Add Component > Steam Audio Baked Listener.

Click Bake Reflections. Baking may take a while to complete.

Select the actor containing the Audio component to which you want to apply reflections.

Make sure a Steam Audio Source component is attached, and Simulate Reflections is checked.

Set Reflections Type to Baked Static Listener.

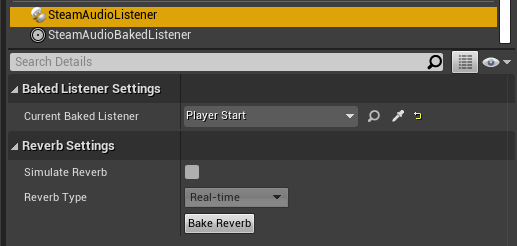

Select any actor in your level (a good choice might be the Player Start actor).

In the Details tab, click Add Component > Steam Audio Listener.

Set Current Baked Listener to the actor containing the Steam Audio Baked Listener component you added earlier.

Typically, you would use scripting to control the value of Current Baked Listener every time the listener teleports to a new position.
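The selection logic behind such a script can be as simple as picking the baked listener closest to the position the listener teleported to. The sketch below shows that logic in an engine-agnostic form; in a project you would implement it in Blueprint or C++ and assign the result to Current Baked Listener. The `nearest_baked_listener` helper and the actor names are hypothetical.

```python
import math


def nearest_baked_listener(listener_pos, baked_listeners):
    """Return the name of the baked listener closest to listener_pos.

    baked_listeners maps a name to the (x, y, z) position it was baked at.
    """
    return min(baked_listeners,
               key=lambda name: math.dist(listener_pos, baked_listeners[name]))


baked = {"Lobby": (0.0, 0.0, 0.0), "Balcony": (10.0, 0.0, 5.0)}
current = nearest_baked_listener((8.0, 1.0, 4.0), baked)
```

If your teleport destinations coincide exactly with the baked positions, a direct lookup by destination would work just as well as a nearest-neighbor search.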

You can control many aspects of the baking process. For more information, see Steam Audio Listener, Steam Audio Baked Listener, and Steam Audio Settings.

Bake physics-based reverb

You can bake physics-based reverb throughout a level, if the geometry is mostly static. To do this:

Select any actor in your level (a good choice might be the Player Start actor).

Make sure a Steam Audio Listener component is attached, and Simulate Reverb is checked.

Set Reverb Type to Baked.

Click Bake Reverb.

You can control many aspects of the baking process. For more information, see Steam Audio Listener and Steam Audio Settings.

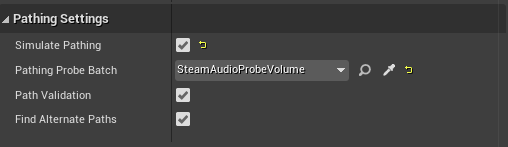

Model sound paths from a moving source to a moving listener

Note

This feature currently requires the use of third-party audio middleware, due to a bug/limitation in Unreal’s built-in audio engine. This issue may be resolved in a future release of Unreal Engine.

You may want to model sound propagation from a source to the listener, along a long, complicated path, like a twisting corridor. The main goal is often to ensure that the sound is positioned as if it’s coming from the correct door, window, or other opening. This is known as the pathing or portaling problem.

While you can solve this by enabling reflections on an audio source, it would require too many rays (and so too much CPU) to simulate accurately. Instead, you can use Steam Audio to bake pathing information in a probe volume, and use it to efficiently find paths from a moving source to a moving listener. To do this:

Select the Steam Audio Probe Volume actor you want to bake pathing information for.

In the Details tab, click Bake Pathing.

Select the actor containing the Audio component you want to enable pathing effects for.

Make sure a Steam Audio Source component is attached.

Check Simulate Pathing.

You can control many aspects of the baking process, as well as the run-time path finding algorithm. For more information, see Steam Audio Source, Steam Audio Probe Volume, and Steam Audio Settings.
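One way to picture run-time path finding is as a shortest-path search over a graph whose nodes are probes and whose edges connect probes that can see each other. The sketch below uses Dijkstra's algorithm on such a graph. It is a generic illustration, not Steam Audio's path finding algorithm, and the graph layout and `shortest_path` helper are hypothetical.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra over {node: {neighbor: distance}}.

    Returns (total_distance, [nodes along the path]) or None if unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, edge in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + edge, neighbor, path + [neighbor]))
    return None


# Three probes: going A -> B -> C around a corner is shorter than the
# long direct link from A to C.
probe_graph = {
    "A": {"B": 1.0, "C": 5.0},
    "B": {"A": 1.0, "C": 1.0},
    "C": {"A": 5.0, "B": 1.0},
}
result = shortest_path(probe_graph, "A", "C")
```

The last segment of such a path indicates the direction from which the sound should appear to arrive at the listener, for example through the correct doorway.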

Enable GPU acceleration

Simulating and rendering complex sound propagation effects is very compute-intensive. For example, rendering long convolution reverbs with high Ambisonic order, or rendering many sources with reflections enabled, can cause the audio thread to use significant CPU time, which may lead to audible artifacts. And while Steam Audio runs real-time simulation in a separate thread, simulating large numbers of sources or tracing many millions of rays may result in a noticeable lag between the player moving and the acoustics updating to match.

You can choose to offload some or all of the most compute-intensive portions of Steam Audio to the GPU. This can be useful in several ways:

You can run convolution reverb on the GPU, which lets you run very long convolutions with many channels, without blocking the audio thread.

You can run real-time reverb or reflection simulations on the GPU, which results in much more responsive updates.

You can use the GPU while baking reverb or reflections, which allows designers to spend much less time waiting for bakes to complete.

To enable GPU acceleration for real-time simulations or baking:

In the main menu, click Edit > Project Settings.

Click Plugins > Steam Audio to open the Steam Audio Settings.

Under Ray Tracer Settings, set Scene Type to Radeon Rays.

Radeon Rays is an OpenCL-based GPU ray tracer that works on a wide range of GPUs, including both NVIDIA and AMD models. Radeon Rays support in Steam Audio is available on Windows 64-bit only.

To enable GPU acceleration for convolution reverb:

In the main menu, click Edit > Project Settings.

Click Plugins > Steam Audio to open the Steam Audio Settings.

Under Reflection Effect Settings, set Reflection Effect Type to TrueAudio Next.

TrueAudio Next is an OpenCL-based GPU convolution tool that requires supported AMD GPUs. TrueAudio Next support in Steam Audio is available on Windows 64-bit only.

You can configure many aspects of GPU acceleration. In particular, on supported AMD GPUs, you can restrict Steam Audio to use only a portion of the GPU’s compute resources, ensuring that visual rendering and physics simulations can continue to run at a steady rate. For more information, see Steam Audio Settings.